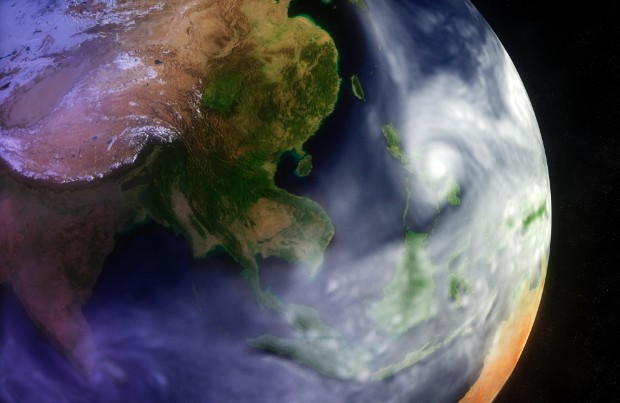

Virtual Climate:

Building better climate models is becoming more important as scientists work to predict the potential effects of a warming planet. Supercomputers are already integral to our understanding of atmospheric changes. "Whenever you see forecasters on TV, that weather movie took hours and hours to render on a supercomputer," says Sumit Gupta, a general manager at Nvidia's accelerated computing unit. (Nvidia's chips help power Titan.) But even the best supercomputers today fall short of researchers' goals. If you think of the globe as an image, the best supercomputers can only render pixels the size of 14 square kilometers. An exascale computer could bring that down to one square kilometer. The effect? Scientists could capture fine-grained climate factors such as individual cloud formations and ocean eddies.
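To make the pixel comparison concrete, here is a rough count of how many grid cells it takes to tile the globe at each resolution. This is a back-of-the-envelope sketch, assuming Earth's surface area is about 510 million square kilometers and reading "pixel size" as the area of one horizontal grid cell; the names `EARTH_SURFACE_KM2` and `cell_count` are illustrative only.

```python
# Rough pixel-count arithmetic for a global climate grid.
# Assumption: Earth's surface area is about 510 million km^2, and
# "pixel size" means the area of one horizontal grid cell.
EARTH_SURFACE_KM2 = 510_000_000


def cell_count(cell_area_km2: float) -> int:
    """Approximate number of horizontal grid cells needed to cover the globe."""
    return round(EARTH_SURFACE_KM2 / cell_area_km2)


today = cell_count(14)     # cells at ~14 km^2 per pixel
exascale = cell_count(1)   # cells at ~1 km^2 per pixel

print(f"14 km^2 cells: {today:,}")
print(f" 1 km^2 cells: {exascale:,}")
print(f"ratio: {exascale / today:.1f}x")
```

Shrinking each pixel from 14 to 1 square kilometer multiplies the number of cells per layer by about 14. Real climate models also stack many vertical layers and typically need shorter time steps at finer resolution, so the total computing cost grows faster than this cell count alone suggests.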

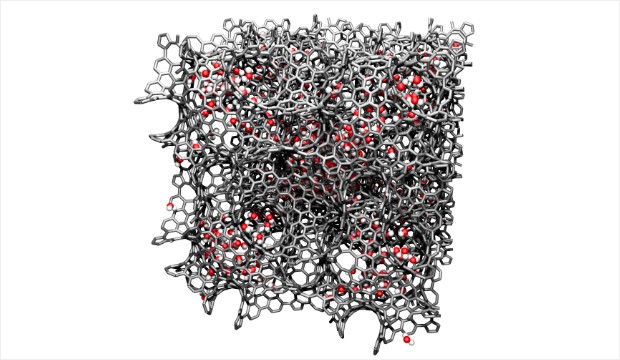

Digital Cells:

Much of the future of pharmaceuticals lies in algorithms, not petri dishes. Researchers will increasingly develop drugs using programs that predict how chemicals will interact with the body. Titan, for example, will advance these digital drug tests, says Jack Wells, the director of science at the Oak Ridge Leadership Computing Facility. It will let researchers run simulations using a technique called molecular dynamics. In this approach, used to solve problems in biology, chemistry, and materials science, scientists model the movement of individual atoms or molecules, accounting for the forces acting on each of them. Unfortunately, even with today's most powerful computers, scientists can apply molecular dynamics only to small clusters of atoms. A human cell, by contrast, is made up of billions of interacting atoms and is too complex for current computers to model. The hope is that future supercomputers could simulate whole cells.
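The core loop of molecular dynamics can be sketched in a few lines: compute the force every atom exerts on every other atom, nudge positions and velocities forward by a small time step, and repeat. The toy below is a minimal sketch, not Titan's software: it assumes a Lennard-Jones pair potential in reduced units (unit mass, energy and length scales), two dimensions, no boundaries, and velocity Verlet integration. All function names are hypothetical.

```python
# Toy molecular dynamics: a few atoms interacting through a Lennard-Jones
# pair potential, advanced with velocity Verlet integration.
# Assumptions (illustrative only): 2-D, reduced units with
# mass = epsilon = sigma = 1, no periodic boundaries, no cutoff.

def lj_forces(pos):
    """Force on every atom from every other atom: O(N^2) pairs per step."""
    n = len(pos)
    forces = [[0.0, 0.0] for _ in range(n)]
    for i in range(n):
        for j in range(i + 1, n):
            dx = pos[i][0] - pos[j][0]
            dy = pos[i][1] - pos[j][1]
            r2 = dx * dx + dy * dy
            inv6 = 1.0 / r2 ** 3  # (1/r)^6
            # Magnitude 24*(2/r^13 - 1/r^7), projected onto (dx, dy)/r
            scale = 24.0 * (2.0 * inv6 * inv6 - inv6) / r2
            forces[i][0] += scale * dx
            forces[i][1] += scale * dy
            forces[j][0] -= scale * dx  # Newton's third law
            forces[j][1] -= scale * dy
    return forces


def total_energy(pos, vel):
    """Kinetic energy plus Lennard-Jones energy 4*(1/r^12 - 1/r^6) per pair."""
    kinetic = sum(0.5 * (v[0] ** 2 + v[1] ** 2) for v in vel)
    potential = 0.0
    for i in range(len(pos)):
        for j in range(i + 1, len(pos)):
            dx = pos[i][0] - pos[j][0]
            dy = pos[i][1] - pos[j][1]
            inv6 = 1.0 / (dx * dx + dy * dy) ** 3
            potential += 4.0 * (inv6 * inv6 - inv6)
    return kinetic + potential


def verlet_step(pos, vel, forces, dt):
    """Advance positions and velocities in place by one time step dt."""
    for p, v, f in zip(pos, vel, forces):
        for k in range(2):
            v[k] += 0.5 * dt * f[k]  # first half kick
            p[k] += dt * v[k]        # drift
    new_forces = lj_forces(pos)
    for v, f in zip(vel, new_forces):
        for k in range(2):
            v[k] += 0.5 * dt * f[k]  # second half kick with updated forces
    return new_forces


# Three atoms near their preferred spacing, starting at rest.
positions = [[0.0, 0.0], [1.2, 0.0], [0.6, 1.05]]
velocities = [[0.0, 0.0] for _ in positions]
forces = lj_forces(positions)

e_start = total_energy(positions, velocities)
for _ in range(1000):
    forces = verlet_step(positions, velocities, forces, dt=0.001)
e_end = total_energy(positions, velocities)

print(f"energy drift after 1000 steps: {abs(e_end - e_start):.2e}")
```

The nested pair loop in `lj_forces` is the crux: its cost grows with the square of the atom count, which hints at why cell-sized systems are out of reach. Production codes cut this down with distance cutoffs, neighbor lists and long-range solvers, but the work per step still grows with the number of atoms.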

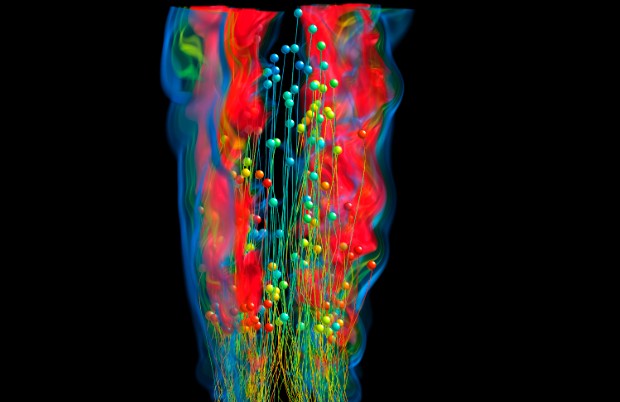

Future Fuel:

The equations behind burning fuel are extremely difficult to understand at the microscale, so scientists studying combustion must rely on guesswork. With Titan, scientists can model the chemical reactions in the combustion of relatively simple alcohol fuels, including butanol. But gasoline and biodiesel are complex fuels (their molecules are longer and therefore trickier to model), so ever more powerful supercomputers will be needed to simulate them.

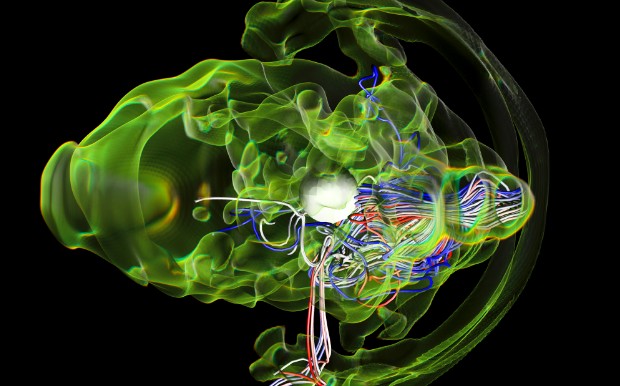

Inside Supernova:

More powerful supercomputers could shed light on some of the most exotic phenomena in the universe. For example, scientists may one day be able to model the enormous forces at work when a star explodes. These explosions, called supernovae, can release enough energy and light to outshine an entire galaxy. Supernovae involve not just astrophysics but also chemical and nuclear reactions. Running programs that include all the sciences at play would require exascale computing power, on the order of an exaflop (a billion billion calculations per second). With that much power, the next generation of supercomputers could bring the mysteries of deep space closer to home.
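Exascale performance means roughly 10^18 floating-point operations per second (one exaflop/s), a thousand times a petaflop/s. The sketch below compares ideal run times for a hypothetical simulation needing 10^21 operations; the workload size and the names `WORKLOAD_OPS` and `runtime_seconds` are invented purely for illustration, and real codes rarely sustain a machine's peak speed.

```python
# Back-of-the-envelope: how long a fixed amount of arithmetic takes at
# different machine speeds. The workload size below is hypothetical.
PETAFLOPS = 1e15  # floating-point operations per second
EXAFLOPS = 1e18

WORKLOAD_OPS = 1e21  # hypothetical simulation needing 10^21 operations


def runtime_seconds(ops: float, flops: float) -> float:
    """Ideal wall-clock time, assuming the machine runs at full speed."""
    return ops / flops


for name, speed in [("1 petaflop/s", PETAFLOPS), ("1 exaflop/s", EXAFLOPS)]:
    secs = runtime_seconds(WORKLOAD_OPS, speed)
    print(f"{name}: {secs:,.0f} s (about {secs / 3600:,.1f} hours)")
```

Under these assumptions, a job that would tie up a petaflop machine for days finishes in minutes at exascale, which is the kind of speedup that makes multi-physics supernova runs thinkable.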

Via: CNN
