By Michael Keller

“In the human-computer interaction community, the general notion we’re working under is reality-based interfaces,” says Dr. Robert Jacob, a Tufts University computer science professor studying brain-computer interfaces. “We’re trying to design what is intuitive in the real world directly into our interaction with computers.”

He says intuitive interaction is apparent in the flowering of smart devices: spreading the thumb and pointer finger apart on a touchscreen to zoom in and pinching them together to zoom out, along with various augmented reality apps.

But such newfound intuitive functionality, amazing as it is, is just the beginning of what’s possible, says Chris Harrison, a doctoral candidate at Carnegie Mellon’s Human-Computer Interaction Institute.

“Imagine if the only thing you could use in the real world to operate things were a touch or a swipe,” says Harrison. “The world would be completely unusable. We knock. We scratch. We rub. We open bottles and we move things around. How do we bring that type of richness to the interface?”

Harrison’s work focuses on extending mobile interaction and input technologies. He worked on a project called TapSense, which allows a touchscreen to recognize the difference between a user’s knuckle, fingertip, pad and nail, and to use each type of contact to perform a different function.

“The next obvious thing that we’ll see with human-computer interaction is a move beyond multitouch,” Harrison says. “We won’t come to terms with the small space to use on phones and we’ll move to projectors.”

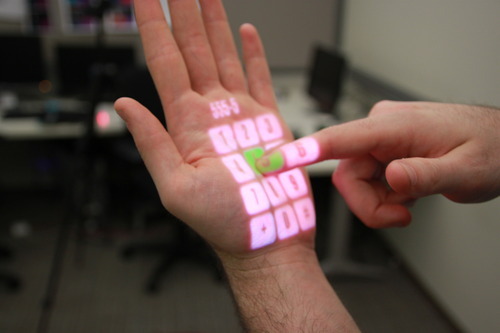

His idea is to break free from the constraints of the display screen altogether. He has been turning that idea into reality with a project called OmniTouch, a portable device that projects interactive graphics onto a wall, table or the user’s body. The projection is meant to serve as an extension of a multitouch smart device.

Several products available or under development from others also demonstrate that using a finger or two to touch a tiny mobile screen isn’t the pinnacle of human input technology. They show that fuller use of hand gestures, a move away from physical contact with the computer and use of the space around a user are all in the offing for the next generation of human-computer interaction.

One information visualization company, Oblong, has developed what it calls a spatial operating environment, which fans of Minority Report should recognize.

Another, Leap Motion, has created a $70 device that interprets a user’s gestures within an eight-cubic-foot space, allowing the person’s movements to interact with the computer without touching it.

And researchers are working on different ways to glean input data, from Microsoft Kinect’s infrared laser depth finder and audio recognition software to a research project called SoundWave, which generates inaudible tones and uses the Doppler effect to sense gestures.
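The Doppler idea behind a system like SoundWave can be illustrated with a little arithmetic. The sketch below is not SoundWave's actual code; it assumes an 18 kHz tone, a speed of sound of 343 m/s and a hypothetical 5 Hz detection threshold, and it uses the standard approximation that a tone reflected off a moving hand shifts by about twice the hand's speed times the tone frequency, divided by the speed of sound.

```python
# Assumed constant: speed of sound in air at about 20 degrees C.
SPEED_OF_SOUND_M_S = 343.0


def doppler_shift_hz(tone_hz: float, hand_speed_m_s: float) -> float:
    """Approximate shift of a tone reflected off a moving hand.

    Positive speed means the hand moves toward the device. The factor of 2
    accounts for the round trip: the hand both receives and re-emits the tone.
    """
    return 2.0 * hand_speed_m_s * tone_hz / SPEED_OF_SOUND_M_S


def classify_motion(shift_hz: float, threshold_hz: float = 5.0) -> str:
    """Label a shift as motion toward, away or still (threshold is hypothetical)."""
    if shift_hz > threshold_hz:
        return "toward"
    if shift_hz < -threshold_hz:
        return "away"
    return "still"


if __name__ == "__main__":
    # A hand moving at 0.5 m/s toward the device, with an assumed 18 kHz tone.
    shift = doppler_shift_hz(18_000.0, 0.5)
    print(round(shift, 1), classify_motion(shift))
```

Under these assumptions a hand moving at half a meter per second shifts the tone by roughly 52 Hz, a small change next to the 18 kHz tone, which suggests why such a system would need fine-grained frequency analysis.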

But not all of the work to make interactions with computers easier involves intentional actions or body movements. In fact, Jacob’s research at Tufts University is seeking to create interaction with a computer without the user knowing it.

His research focuses on lowering the cognitive demand of interacting with computers. His team is measuring blood flow changes in the brain to detect when a computer user is overloaded with work.

“When the brain does something hard, it sends out a request to the body that says, ‘Hey, I need more blood up here,’” Jacob says. “We shine a light into a person’s head and a sensor measures how much comes back out. That data can be used as real-time input to direct a person’s interaction with a computer.”

He says a computer can use the information from this technique, called functional near-infrared spectroscopy, to adapt the user’s interface and manage workload. Imagine this: A pilot is given a fleet of five unmanned aerial vehicles to fly at once. Using the device, called Brainput, and a “heavy dose of machine learning,” Jacob says, the computer would be able to understand when the pilot is working too hard to fly the fleet and transfer control of one or two of the vehicles to another person automatically. While the pilot is doing something less demanding, like flying the UAVs in a straight line to a destination, he could operate more of them.
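The handoff logic in that scenario can be sketched in a few lines. This is not Brainput's implementation: the 0-to-1 workload score, the thresholds, the three-reading averaging window and the `WorkloadAllocator` name are all hypothetical, and a simple moving average stands in for the machine learning Jacob describes.

```python
from collections import deque


class WorkloadAllocator:
    """Toy allocator: sheds or returns one UAV at a time based on workload.

    All numbers are illustrative assumptions, not values from the research.
    """

    def __init__(self, fleet_size=5, min_assigned=3, window=3, high=0.7, low=0.3):
        self.fleet_size = fleet_size      # UAVs the pilot starts with
        self.min_assigned = min_assigned  # hand off at most two of five
        self.assigned = fleet_size
        self.readings = deque(maxlen=window)  # recent workload scores
        self.high = high                  # above this average, shed a UAV
        self.low = low                    # below this average, return a UAV

    def update(self, workload: float) -> int:
        """Record a workload score in [0, 1]; return UAVs left with the pilot."""
        self.readings.append(workload)
        average = sum(self.readings) / len(self.readings)
        if average > self.high and self.assigned > self.min_assigned:
            self.assigned -= 1  # transfer one vehicle to another operator
        elif average < self.low and self.assigned < self.fleet_size:
            self.assigned += 1  # give one vehicle back to the pilot
        return self.assigned


if __name__ == "__main__":
    allocator = WorkloadAllocator()
    for score in (0.9, 0.9, 0.9, 0.1, 0.1, 0.1):
        print(score, allocator.update(score))
```

Using separate high and low thresholds, with averaging in between, keeps the sketch from passing vehicles back and forth on every noisy reading.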

“We’re coming at it as human interaction designers and asking how can you make a good interface without actively inputting data into the computer,” Jacob says.

While human-computer interaction is still in its early stages, several input technologies are developing: hand and body gesturing, natural language parsing, eye movement tracking and machine learning that gives devices context awareness.

Carnegie Mellon’s Harrison sees the development of these technologies as complementary and, ultimately, synergistic.

“Pulling all this rich sensing and input together, it’ll sort of feel like AI [artificial intelligence],” he says. “There are a lot of pieces that need to come together, but it will be here in our lifetime.”

Top Image: An interface projected onto the user’s hand by OmniTouch, a novel wearable system that enables graphical, interactive, multitouch input on arbitrary, everyday surfaces. Photo courtesy Chris Harrison.

Via: "TxchNologist"
